Any logical expression can be used as an index which opens a wide

range of possibilities. For example, you can remove or focus on

entries that match specific criteria. For example, you might want to

remove all entries that are above a certain value:

For another example, suppose you want to join together the values that

match two different factors in another vector:

Note that a single ‘|’ was used in the previous example. There is a

difference between ‘||’ and ‘|’. A single bar will perform a vector

operation, term by term, while a double bar will evaluate to a single

TRUE or FALSE result:

Here we look at the most basic linear least squares regression. The

main purpose is to provide an example of the basic commands. It is

assumed that you know how to enter data or read data files which is

covered in the first chapter, and it is assumed that you are familiar

with the different data types.

We will examine the interest rate for four year car loans, and the

data that we use comes from the

U.S. Federal Reserve’s mean rates .

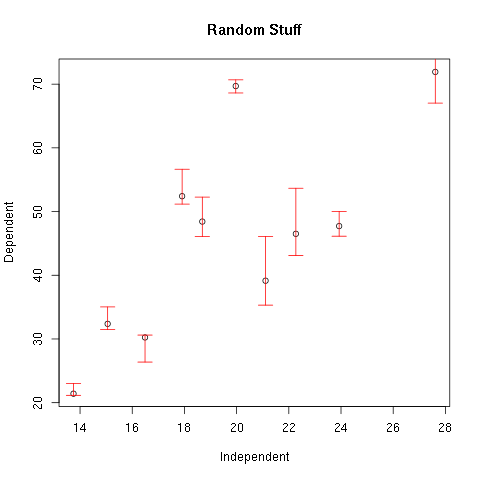

We are looking at and plotting means. This, of course, is a very bad

thing because it removes a lot of the variance and is misleading. The

only reason that we are working with the data in this way is to

provide an example of linear regression that does not use too many

data points. Do not try this without a professional near you, and if a

professional is not near you do not tell anybody you did this. They

will laugh at you. People are mean, especially professionals.

The first thing to do is to specify the data. Here there are only five

pairs of numbers so we can enter them in manually. Each of the five

pairs consists of a year and the mean interest rate:

The next thing we do is take a look at the data. We first plot the

data using a scatter plot and notice that it looks linear. To confirm

our suspicions we then find the correlation between the year and the

mean interest rates:

> plot(year,rate,

main="Commercial Banks Interest Rate for 4 Year Car Loan",

sub="http://www.federalreserve.gov/releases/g19/20050805/")

> cor(year,rate)

[1] -0.9880813

exercise:

year <- c(2000 , 2001 , 2002 , 2003 , 2004)

rate <- c(9.34 , 8.50 , 7.62 , 6.93 , 6.60)

plot(year,rate,

main="Commercial Banks Interest Rate for 4 Year Car Loan",

sub="http://www.federalreserve.gov/releases/g19/20050805/")

cor(year,rate)

fit <- lm(rate ~ year)

fit

attributes(fit)

names(fit)

length(fit)

fit$coefficients[[2]]*2015+fit$coefficients[[1]]

At this point we should be excited because associations that strong

never happen in the real world unless you cook the books or work with

averaged data. The next question is what straight line comes “closest”

to the data? In this case we will use least squares regression as one

way to determine the line.

Before we can find the least square regression line we have to make

some decisions. First we have to decide which is the explanatory and

which is the response variable. Here, we arbitrarily pick the

explanatory variable to be the year, and the response variable is the

interest rate. This was chosen because it seems like the interest rate

might change in time rather than time changing as the interest rate

changes. (We could be wrong, finance is very confusing.)

The command to perform the least square regression is the lm

command. The command has many options, but we will keep it simple and

not explore them here. If you are interested use the help(lm) command

to learn more. Instead the only option we examine is the one necessary

argument which specifies the relationship.

Since we specified that the interest rate is the response variable and

the year is the explanatory variable this means that the regression

line can be written in slope-intercept form:

\[rate = (slope) year + (intercept)\]

The way that this relationship is defined in the lm command is that

you write the vector containing the response variable, a tilde (“~”),

and a vector containing the explanatory variable:

> fit <- lm(rate ~ year)

> fit

Call:

lm(formula = rate ~ year)

Coefficients:

(Intercept) year

1419.208 -0.705

When you make the call to lm it returns a variable with a lot of

information in it. If you are just learning about least squares

regression you are probably only interested in two things at this

point, the slope and the y-intercept. If you just type the name of the

variable returned by lm it will print out this minimal information to

the screen. (See above.)

If you would like to know what else is stored in the variable you can

use the attributes command:

> attributes(fit)

$names

[1] "coefficients" "residuals" "effects" "rank"

[5] "fitted.values" "assign" "qr" "df.residual"

[9] "xlevels" "call" "terms" "model"

$class

[1] "lm"

One of the things you should notice is the coefficients variable

within fit. You can print out the y-intercept and slope by accessing

this part of the variable:

> fit$coefficients[1]

(Intercept)

1419.208

> fit$coefficients[[1]]

[1] 1419.208

> fit$coefficients[2]

year

-0.705

> fit$coefficients[[2]]

[1] -0.705

Note that if you just want to get the number you should use two square

braces. So if you want to get an estimate of the interest rate in the

year 2015 you can use the formula for a line:

> fit$coefficients[[2]]*2015+fit$coefficients[[1]]

[1] -1.367

So if you just wait long enough, the banks will pay you to take a car!

A better use for this formula would be to calculate the residuals and

plot them:

> res <- rate - (fit$coefficients[[2]]*year+fit$coefficients[[1]])

> res

[1] 0.132 -0.003 -0.178 -0.163 0.212

> plot(year,res)

That is a bit messy, but fortunately there are easier ways to get the

residuals. Two other ways are shown below:

> residuals(fit)

1 2 3 4 5

0.132 -0.003 -0.178 -0.163 0.212

> fit$residuals

1 2 3 4 5

0.132 -0.003 -0.178 -0.163 0.212

> plot(year,fit$residuals)

>

If you want to plot the regression line on the same plot as your

scatter plot you can use the abline function along with your variable

fit:

> plot(year,rate,

main="Commercial Banks Interest Rate for 4 Year Car Loan",

sub="http://www.federalreserve.gov/releases/g19/20050805/&quo, .toc-backreft;)

> abline(fit)

Finally, as a teaser for the kinds of analyses you might see later,

you can get the results of an F-test by asking R for a summary of the

fit variable:

> summary(fit)

Call:

lm(formula = rate ~ year)

Residuals:

1 2 3 4 5

0.132 -0.003 -0.178 -0.163 0.212

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1419.20800 126.94957 11.18 0.00153 **

year -0.70500 0.06341 -11.12 0.00156 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.2005 on 3 degrees of freedom

Multiple R-Squared: 0.9763, Adjusted R-squared: 0.9684

F-statistic: 123.6 on 1 and 3 DF, p-value: 0.001559

9. Calculating Confidence Intervals

Here we look at some examples of calculating confidence intervals. The

examples are for both normal and t distributions. We assume that you

can enter data and know the commands associated with basic

probability. Note that an easier way to calculate confidence intervals

using the t.test command is discussed in section The Easy Way.

Here we will look at a fictitious example. We will make some

assumptions for what we might find in an experiment and find the

resulting confidence interval using a normal distribution. Here we

assume that the sample mean is 5, the standard deviation is 2, and the

sample size is 20. In the example below we will use a 95% confidence

level and wish to find the confidence interval. The commands to find

the confidence interval in R are the following:

> a <- 5

> s <- 2

> n <- 20

> error <- qnorm(0.975)*s/sqrt(n)

> left <- a-error

> right <- a+error

> left

[1] 4.123477

> right

[1] 5.876523

Our level of certainty about the true mean is 95% in predicting that the

true mean is within the interval

between 4.12 and 5.88 assuming that the original random variable is

normally distributed, and the samples are independent.

Calculating the confidence interval when using a t-test is similar to

using a normal distribution. The only difference is that we use the

command associated with the t-distribution rather than the normal

distribution. Here we repeat the procedures above, but we will assume

that we are working with a sample standard deviation rather than an

exact standard deviation.

Again we assume that the sample mean is 5, the sample standard

deviation is 2, and the sample size is 20. We use a 95% confidence

level and wish to find the confidence interval. The commands to find

the confidence interval in R are the following:

> a <- 5

> s <- 2

> n <- 20

> error <- qt(0.975,df=n-1)*s/sqrt(n)

> left <- a-error

> right <- a+error

> left

[1] 4.063971

> right

[1] 5.936029

The true mean has a probability of 95% of being in the interval

between 4.06 and 5.94 assuming that the original random variable is

normally distributed, and the samples are independent.

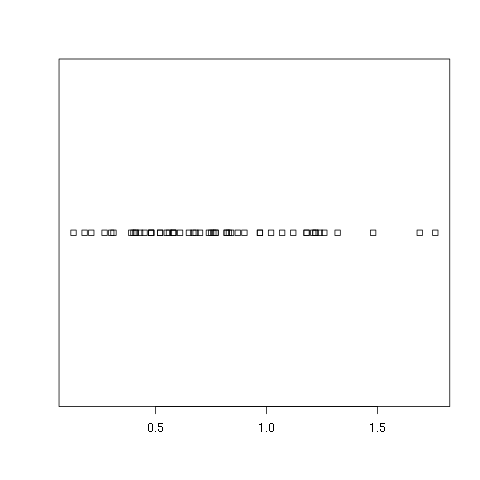

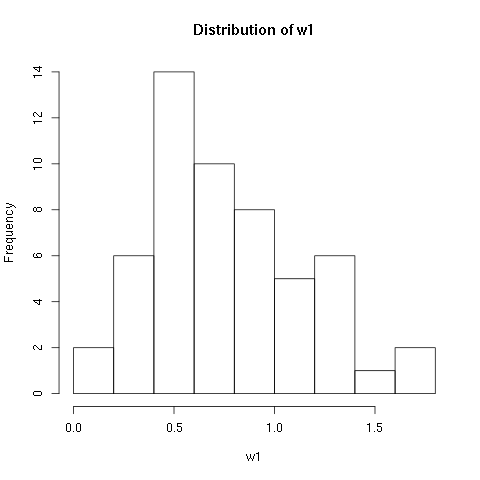

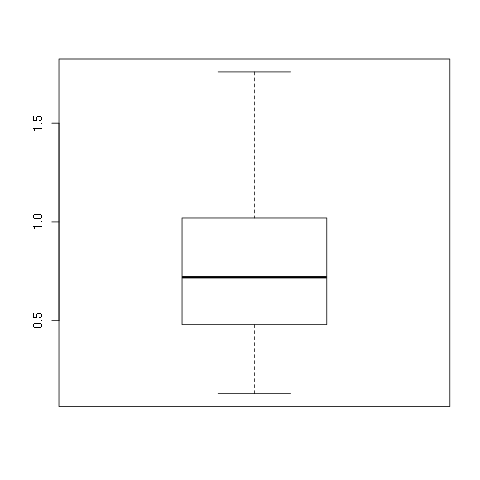

We now look at an example where we have a univariate data set and want

to find the 95% confidence interval for the mean. In this example we

use one of the data sets given in the data input chapter. We use the

w1.dat data set:

> w1 <- read.csv(file="w1.dat",sep=",",head=TRUE)

, .toc-backref> summary(w1)

vals

Min. :0.130

1st Qu.:0.480

Median :0.720

Mean :0.765

3rd Qu.:1.008

Max. :1.760

> length(w1$vals)

[1] 54

> mean(w1$vals)

[1] 0.765

> sd(w1$vals)

[1] 0.3781222

We can now calculate an error for the mean:

> error <- qt(0.975,df=length(w1$vals)-1)*sd(w1$vals)/sqrt(length(w1$vals))

> error

[1] 0.1032075

The confidence interval is found by adding and subtracting the error

from the mean:

> left <- mean(w1$vals)-error

> right <- mean(w1$vals)+error

> left

[1] 0.6617925

> right

[1] 0.8682075

There is a 95% probability that the true mean is between 0.66 and 0.87

assuming that the original random variable is normally distributed,

and the samples are independent.

Suppose that you want to find the confidence intervals for many

tests. This is a common task and most software packages will allow you

to do this.

We have three different sets of results:

| Comparison 1 |

|

|

| |

Mean |

Std. Dev. |

Number (pop.) |

| Group I |

10 |

3 |

300 |

| Group II |

10.5 |

2.5 |

230 |

| Comparison 2 |

|

|

| |

Mean |

Std. Dev. |

Number (pop.) |

| Group I |

12 |

4 |

210 |

| Group II |

13 |

5.3 |

340 |

| Comparison 3 |

|

|

| |

Mean |

Std. Dev. |

Number (pop.) |

| Group I |

30 |

4.5 |

420 |

| Group II |

28.5 |

3 |

400 |

For each of these comparisons we want to calculate the associated

confidence interval for the difference of the means. For each

comparison there are two groups. We will refer to group one as the

group whose results are in the first row of each comparison above. We

will refer to group two as the group whose results are in the second

row of each comparison above. Before we can do that we must first

compute a standard error and a t-score. We will find general formulae

which is necessary in order to do all three calculations at once.

We assume that the means for the first group are defined in a variable

called m1. The means for the second group are defined in a variable

called m2. The standard deviations for the first group are in a

variable called sd1. The standard deviations for the second group

are in a variable called sd2. The number of samples for the first

group are in a variable called num1. Finally, the number of samples

for the second group are in a variable called num2.

With these definitions the standard error is the square root of

(sd1^2)/num1+(sd2^2)/num2. The R commands to do this can be found

below:

> m1 <- c(10,12,30)

> m2 <- c(10.5,13,28.5)

> sd1 <- c(3,4,4.5)

> sd2 <- c(2.5,5.3,3)

> num1 <- c(300,210,420)

> num2 <- c(230,340,400)

> se <- sqrt(sd1*sd1/num1+sd2*sd2/num2)

> error <- qt(0.975,df=pmin(num1,num2)-1)*se

To see the values just type in the variable name on a line alone:

> m1

[1] 10 12 30

> m2

[1] 10.5 13.0 28.5

> sd1

[1] 3.0 4.0 4.5

> sd2

[1] 2.5 5.3 3.0

> num1

[1] 300 210 420

> num2

[1] 230 340 400

> se

[1] 0.2391107 0.3985074 0.2659216

> error

[1] 0.4711382 0.7856092 0.5227825

Now we need to define the confidence interval around the assumed

differences. Just as in the case of finding the p values in previous

chapter we have to use the pmin command to get the number of degrees

of freedom. In this case the null hypotheses are for a difference of

zero, and we use a 95% confidence interval:

> left <- (m1-m2)-error

> right <- (m1-m2)+error

> left

[1] -0.9711382 -1.7856092 0.9772175

> right

[1] -0.02886177 -0.21439076 2.02278249

This gives the confidence intervals for each of the three tests. For

example, in the first experiment the 95% confidence interval is

between -0.97 and -0.03 assuming that the random variables are

normally distributed, and the samples are independent.

10. Calculating p Values

Here we look at some examples of calculating p values. The examples

are for both normal and t distributions. We assume that you can enter

data and know the commands associated with basic probability. We first

show how to do the calculations the hard way and show how to do the

calculations. The last method makes use of the t.test command and

demonstrates an easier way to calculate a p value.

We look at the steps necessary to calculate the p value for a

particular test. In the interest of simplicity we only look at a two

sided test, and we focus on one example. Here we want to show that the

mean is not close to a fixed value, a.

\[\begin{split}H_o: \mu_x & = & a,\end{split}\]\[\begin{split}H_a: \mu_x & \neq & a,\end{split}\]

The p value is calculated for a particular sample mean. Here we assume

that we obtained a sample mean, x and want to find its p value. It is

the probability that we would obtain a given sample mean that is

greater than the absolute value of its Z-score or less than the

negative of the absolute value of its Z-score.

For the special case of a normal distribution we also need the

standard deviation. We will assume that we are given the standard

deviation and call it s. The calculation for the p value can be done

in several of ways. We will look at two ways here. The first way is to

convert the sample means to their associated Z-score. The other way is

to simply specify the standard deviation and let the computer do the

conversion. At first glance it may seem like a no brainer, and we

should just use the second method. Unfortunately, when using the

t-distribution we need to convert to the t-score, so it is a good idea

to know both ways.

We first look at how to calculate the p value using the Z-score. The

Z-score is found by assuming that the null hypothesis is true,

subtracting the assumed mean, and dividing by the theoretical standard

deviation. Once t, .toc-backrefhe Z-score is found the probability that the value

could be less the Z-score is found using the pnorm command.

This is not enough to get the p value. If the Z-score that is found is

positive then we need to take one minus the associated

probability. Also, for a two sided test we need to multiply the result

by two. Here we avoid these issues and insure that the Z-score is

negative by taking the negative of the absolute value.

We now look at a specific example. In the example below we will use a

value of a of 5, a standard deviation of 2, and a sample size

of 20. We then find the p value for a sample mean of 7:

> a <- 5

> s <- 2

> n <- 20

> xbar <- 7

> z <- (xbar-a)/(s/sqrt(n))

> z

[1] 4.472136

> 2*pnorm(-abs(z))

[1] 7.744216e-06

We now look at the same problem only specifying the mean and standard

deviation within the pnorm command. Note that for this case we cannot

so easily force the use of the left tail. Since the sample mean is

more than the assumed mean we have to take two times one minus the

probability:

> a <- 5

> s <- 2

> n <- 20

> xbar <- 7

> 2*(1-pnorm(xbar,mean=a,sd=s/sqrt(20)))

[1] 7.744216e-06

Finding the p value using a t distribution is very similar to using

the Z-score as demonstrated above. The only difference is that you

have to specify the number of degrees of freedom. Here we look at the

same example as above but use the t distribution instead:

> a <- 5

> s <- 2

> n <- 20

> xbar <- 7

> t <- (xbar-a)/(s/sqrt(n))

> t

[1] 4.472136

> 2*pt(-abs(t),df=n-1)

[1] 0.0002611934

We now look at an example where we have a univariate data set and want

to find the p value. In this example we use one of the data sets given

in the data input chapter. We use the w1.dat data set:

> w1 <- read.csv(file="w1.dat",sep=",",head=TRUE)

> summary(w1)

vals

Min. :0.130

1st Qu.:0.480

Median :0.720

Mean :0.765

3rd Qu.:1.008

Max. :1.760

> length(w1$vals)

[1] 54

Here we use a two sided hypothesis test,

\[\begin{split}H_o: \mu_x & = & 0.7,\end{split}\]\[\begin{split}H_a: \mu_x & \neq & 0.7.\end{split}\]

So we calculate the sample mean and sample standard deviation in order

to calculate the p value:

> t <- (mean(w1$vals)-0.7)/(sd(w1$vals)/sqrt(length(w1$vals)))

> t

[1] 1.263217

> 2*pt(-abs(t),df=length(w1$vals)-1)

[1] 0.21204

Suppose that you want to find the p values for many tests. This is a

common task and most software packages will allow you to do this. Here

we see how it can be done in R.

Here we assume that we want to do a one-sided hypothesis test for a

number of comparisons. In particular we will look at three hypothesis

tests. All are of the following form:

\[\begin{split}H_o: \mu_1 - \mu_2 & = & 0,\end{split}\]\[\begin{split}H_a: \mu_1 - \mu_2 & \neq & 0.\end{split}\]

We have three different sets of comparisons to make:

| Comparison |

1 |

|

|

| |

Mean |

Std. Dev. |

Number

(pop.) |

| Group I |

10 |

3 |

300 |

| Group II |

10.5 |

2.5 |

230 |

| Comparison |

2 |

|

|

| |

Mean |

Std. Dev. |

Number

(pop.) |

| Group I |

12 |

4 |

210 |

| Group II |

13 |

5.3 |

340 |

| Comparison |

3 |

|

|

| |

Mean |

Std. Dev. |

Number

(pop.) |

| Group I |

30 |

4.5 |

420 |

| Group II |

28.5 |

3 |

400 |

For each of these comparisons we want to calculate a p value. For each

comparison there are two groups. We will refer to group one as the

group whose results are in the first row of each comparison above. We

will refer to group two as the group whose results are in the second

row of each comparison above. Before we can do that we must first

compute a standard error and a t-score. We will find general formulae

which is necessary in order to do all three calculations at once.

We assume that the means for the first group are defined in a variable

called m1. The means for the second group are defined in a variable

called m2. The standard deviations for the first group are in a

variable called sd1. The standard deviations for the second group are

in a variable called sd2. The number of samples for the first group

are in a variable called num1. Finally, the number of samples for the

second group are in a variable called num2.

With these definitions the standard error is the square root of

(sd1^2)/num1+(sd2^2)/num2. The associated t-score is m1 minus m2

all divided by the standard error. The R comands to do this can be

found below:

> m1 <- c(10,12,30)

> m2 <- c(10.5,13,28.5)

> sd1 <- c(3,4,4.5)

> sd2 <- c(2.5,5.3,3)

> num1 <- c(300,210,420)

> num2 <- c(230,340,400)

> se <- sqrt(sd1*sd1/num1+sd2*sd2/num2)

> t <- (m1-m2)/se

To see the values just type in the vari, .toc-backrefable name on a line alone:

> m1

[1] 10 12 30

> m2

[1] 10.5 13.0 28.5

> sd1

[1] 3.0 4.0 4.5

> sd2

[1] 2.5 5.3 3.0

> num1

[1] 300 210 420

> num2

[1] 230 340 400

> se

[1] 0.2391107 0.3985074 0.2659216

> t

[1] -2.091082 -2.509364 5.640761

To use the pt command we need to specify the number of degrees of

freedom. This can be done using the pmin command. Note that there is

also a command called min, but it does not work the same way. You

need to use pmin to get the correct results. The numbers of degrees

of freedom are pmin(num1,num2)-1. So the p values can be found

using the following R command:

> pt(t,df=pmin(num1,num2)-1)

[1] 0.01881168 0.00642689 0.99999998

If you enter all of these commands into R you should have noticed that

the last p value is not correct. The pt command gives the probability

that a score is less that the specified t. The t-score for the last

entry is positive, and we want the probability that a t-score is

bigger. One way around this is to make sure that all of the t-scores

are negative. You can do this by taking the negative of the absolute

value of the t-scores:

> pt(-abs(t),df=pmin(num1,num2)-1)

[1] 1.881168e-02 6.426890e-03 1.605968e-08

The results from the command above should give you the p values for a

one-sided test. It is left as an exercise how to find the p values for

a two-sided test.

The methods above demonstrate how to calculate the p values directly

making use of the standard formulae. There is another, more direct way

to do this using the t.test command. The t.test command takes a

data set for an argument, and the default operation is to perform a

two sided hypothesis test.

> x = c(9.0,9.5,9.6,10.2,11.6)

> t.test(x)

One Sample t-test

data: x

t = 22.2937, df = 4, p-value = 2.397e-05

alternative hypothesis: true mean is not equal to 0

95 percent confidence interval:

8.737095 11.222905

sample estimates:

mean of x

9.98

> help(t.test)

>

That was an obvious result. If you want to test against a

different assumed mean then you can use the mu argument:

> x = c(9.0,9.5,9.6,10.2,11.6)

> t.test(x,mu=10)

One Sample t-test

data: x

t = -0.0447, df = 4, p-value = 0.9665

alternative hypothesis: true mean is not equal to 10

95 percent confidence interval:

8.737095 11.222905

sample estimates:

mean of x

9.98

If you are interested in a one sided test then you can specify which

test to employ using the alternative option:

> x = c(9.0,9.5,9.6,10.2,11.6)

> t.test(x,mu=10,alternative="less")

One Sample t-test

data: x

t = -0.0447, df = 4, p-value = 0.4833

alternative hypothesis: true mean is less than 10

95 percent confidence interval:

-Inf 10.93434

sample estimates:

mean of x

9.98

The t.test, .toc-backref() command also accepts a second data set to compare two

sets of samples. The default is to treat them as independent sets, but

there is an option to treat them as dependent data sets. (Enter

help(t.test) for more information.) To test two different samples,

the first two arguments should be the data sets to compare:

> x = c(9.0,9.5,9.6,10.2,11.6)

> y=c(9.9,8.7,9.8,10.5,8.9,8.3,9.8,9.0)

> t.test(x,y)

Welch Two Sample t-test

data: x and y

t = 1.1891, df = 6.78, p-value = 0.2744

alternative hypothesis true difference in means is not equal to 0

95 percent confidence interval:

-0.6185513 1.8535513

sample estimates:

mean of x mean of y

9.9800 9.3625

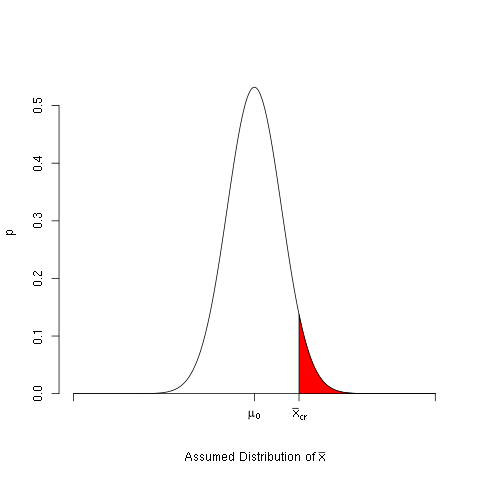

11. Calculating The Power Of A Test

Here we look at some examples of calculating the power of a test. The

examples are for both normal and t distributions. We assume that you

can enter data and know the commands associated with basic

probability. All of the examples here are for a two sided test, and

you can adjust them accordingly for a one sided test.

Here we calculate the power of a test for a normal distribution for a

specific example. Suppose that our hypothesis test is the following:

\[\begin{split}H_o: \mu_x & = & a,\end{split}\]\[\begin{split}H_a: \mu_x & \neq & a,\end{split}\]

The power of a test is the probability that we can the reject null

hypothesis at a given mean that is away from the one specified in the

null hypothesis. We calculate this probability by first calculating

the probability that we accept the null hypothesis when we should

not. This is the probability to make a type II error. The power is the

probability that we do not make a type II error so we then take one

minus the result to get the power.

We can fail to reject the null hypothesis if the sample happens to be

within the confidence interval we find when we assume that the null

hypothesis is true. To get the confidence interval we find the margin

of error and then add and subtract it to the proposed mean, a, to get

the confidence interval. We then turn around and assume instead that

the true mean is at a different, explicitly specified level, and then

find the probability a sample could be found within the original

confidence interval.

In the example below the hypothesis test is for

\[\begin{split}H_o: \mu_x & = & 5,\end{split}\]\[\begin{split}H_a: \mu_x & \neq & 5,\end{split}\]

We will assume that the standard deviation is 2, and the sample size

is 20. In the example below we will use a 95% confidence level and

wish to find the power to detect a true mean that differs from 5 by an

amount of 1.5. (A, .toc-backrefll of these numbers are made up solely for this

example.) The commands to find the confidence interval in R are the

following:

> a <- 5

> s <- 2

> n <- 20

> error <- qnorm(0.975)*s/sqrt(n)

> left <- a-error

> right <- a+error

> left

[1] 4.123477

> right

[1] 5.876523

Next we find the Z-scores for the left and right values assuming that the true mean is 5+1.5=6.5:

> assumed <- a + 1.5

> Zleft <- (left-assumed)/(s/sqrt(n))

> Zright <-(right-assumed)/(s/sqrt(n))

> p <- pnorm(Zright)-pnorm(Zleft)

> p

[1] 0.08163792

The probability that we make a type II error if the true mean is 6.5

is approximately 8.1%. So the power of the test is 1-p:

In this example, the power of the test is approximately 91.8%. If the

true mean differs from 5 by 1.5 then the probability that we will

reject the null hypothesis is approximately 91.8%.

Calculating the power when using a t-test is similar to using a normal

distribution. One difference is that we use the command associated

with the t-distribution rather than the normal distribution. Here we

repeat the test above, but we will assume that we are working with a

sample standard deviation rather than an exact standard deviation. We

will explore three different ways to calculate the power of a

test. The first method makes use of the scheme many books recommend if

you do not have the non-central distribution available. The second

does make use of the non-central distribution, and the third makes use

of a single command that will do a lot of the work for us.

In the example the hypothesis test is the same as above,

\[\begin{split}H_o: \mu_x & = & 5,\end{split}\]\[\begin{split}H_a: \mu_x & \neq & 5,\end{split}\]

Again we assume that the sample standard deviation is 2, and the

sample size is 20. We use a 95% confidence level and wish to find the

power to detect a true mean that differs from 5 by an amount of

1.5. The commands to find the confidence interval in R are the

following:

> a <- 5

> s <- 2

> n <- 20

> error <- qt(0.975,df=n-1)*s/sqrt(n)

> left <- a-error

> right <- a+error

> left

[1] 4.063971

> right

[1] 5.936029

The number of observations is large enough that the results are quite

close to those in the example using the normal distribution. Next we

find the t-scores for the left and right values assuming that the true

mean is 5+1.5=6.5:

> assumed <- a + 1.5

> tleft <- (left-assumed)/(s/sqrt(n))

> tright <- (right-assumed)/(s/sqrt(n))

> p <- pt(tright,df=n-1)-pt(tleft,df=n-1)

> p

[1] 0.1112583

The probability that we make a type II error if the true mean is 6.5

is approximately 11.1%. So the power of the test is 1-p:

In this example, the power of the test is approximately 88.9%. If the

true mean differs from 5 by 1.5 then the probability that we will

reject the null hypothesis is approximately 88.9%. Note that the power

calculated for a normal distribution is slightly higher than for this

one calculated with the t-distribution.

Another way to approximate the power is to make use of the

non-centrality parameter. The idea is that you give it the critical t

scores and the amount that the mean would be shifted if the alternate

mean were the true mean. This is the method that most books recommend.

> ncp <- 1.5/, .toc-backref(s/sqrt(n))

> t <- qt(0.975,df=n-1)

> pt(t,df=n-1,ncp=ncp)-pt(-t,df=n-1,ncp=ncp)

[1] 0.1111522

> 1-(pt(t,df=n-1,ncp=ncp)-pt(-t,df=n-1,ncp=ncp))

[1] 0.8888478

Again, we see that the probability of making a type II error is

approximately 11.1%, and the power is approximately 88.9%. Note that

this is slightly different than the previous calculation but is still

close.

Finally, there is one more command that we explore. This command

allows us to do the same power calculation as above but with a single

command.

> power.t.test(n=n,delta=1.5,sd=s,sig.level=0.05,

type="one.sample",alternative="two.sided",strict = TRUE)

One-sample t test power calculation

n = 20

delta = 1.5

sd = 2

sig.level = 0.05

power = 0.8888478

alternative = two.sided

This is a powerful command that can do much more than just calculate

the power of a test. For example it can also be used to calculate the

number of observations necessary to achieve a given power. For more

information check out the help page, help(power.t.test).

Suppose that you want to find the powers for many tests. This is a

common task and most software packages will allow you to do this. Here

we see how it can be done in R. We use the exact same cases as in the

previous chapter.

Here we assume that we want to do a two-sided hypothesis test for a

number of comparisons and want to find the power of the tests to

detect a 1 point difference in the means. In particular we will look

at three hypothesis tests. All are of the following form:

\[\begin{split}H_o: \mu_1 - \mu2 & = & 0,\end{split}\]\[\begin{split}H_a: \mu_1 - \mu_2 & \neq & 0,\end{split}\]

We have three different sets of comparisons to make:

| Comparison 1 |

|

|

| |

Mean |

Std. Dev. |

Number

(pop.) |

| Group I |

10 |

3 |

300 |

| Group II |

10.5 |

2.5 |

230 |

| Comparison 2 |

|

|

| |

Mean |

Std.

Dev. |

Number

(pop.) |

| Group I |

12 |

4 |

210 |

| Group II |

13 |

5.3 |

340 |

| Comparison 3 |

|

|

| |

Mean |

Std.

Dev. |

Number

(pop.) |

| Group I |

30 |

4.5 |

420 |

| Group II |

28.5 |

3 |

400 |

For each of these comparisons we want to calculate the power of the

test. For each comparison there are two groups. We will refer to group

one as the group whose results are in the first row of each comparison

above. We will refer to group two as the group whose results are in

the second row of each comparison above. Before we can do that we must

first compute a standard error and a t-score. We will find general

formulae which is necessary in order to do all three calculations at

once.

We assume that the means for the first group are defined in a variable

called m1. The means for the second group are defined in a variable

called m2. The standard deviations for the first group are in a

variable called sd1. The standard deviations for the second group are

in a variable called sd2. The number of samples for the first group

are in a variable called num1. Finally, the number of samples for the

second group are in a variable called num2.

With these definitions the standard error is the square root of

(sd1^2)/num1+(sd2^2)/num2. The R commands to do this can be found

below:

> m1 <- c(10,12,30)

> m2 <- c(10.5,13,28.5)

> sd1 <- c(3,4,4.5)

> sd2 <- c(2.5,5.3,3)

> num1 <- c(300,210,420)

> num2 <- c(230,340,400)

> se <- sqrt(sd1*sd1/num1+sd2*sd2/num2)

To see the values just type in the variable name on a line alone:

> m1

[1] 10 12 30

> m2

[1] 10.5 13.0 28.5

> sd1

[1] 3.0 4.0 4.5

> sd2

[1] 2.5 5.3 3.0

> num1

[1] 300 210 420

> num2

[1] 230 340 400

> se

[1] 0.2391107 0.3985074 0.2659216

Now we need to define the confidence interval around the assumed

differences. Just as in the case of finding the p values in previous

chapter we have to use the pmin command to get the number of degrees

of freedom. In this case the null hypotheses are for a difference of

zero, and we use a 95% confidence interval:

> left <- qt(0.025,df=pmin(num1,num2)-1)*se

> right <- -left

> left

[1] -0.4711382 -0.7856092 -0.5227825

> right

[1] 0.4711382 0.7856092 0.5227825

We can now calculate the power of the one sided test. Assuming a true

mean of 1 we can calculate the t-scores associated with both the left

and right variables:

> tl <- (left-1)/se

> tr <- (right-1)/se

> tl

[1] -6.152541 -4.480743 -5.726434

> tr

[1] -2.2117865 -0.5379844 -1.7945799

> probII <- pt(tr,df=pmin(num1,num2)-1) -

pt(tl,df=pmin(num1,num2)-1)

> probII

[1] 0.01398479 0.29557399 0.03673874

> power <- 1-probII

> power

[1] 0.9860152 0.7044260 0.9632613

The results from the command above should give you the p-values for a

two-sided test. It is left as an exercise how to find the p-values for

a one-sided test.

Just as was found above there is more than one way to calculate the

power. We also include the method using the non-central parameter

which is recommen, .toc-backrefded over the previous method:

> t <- qt(0.975,df=pmin(num1,num2)-1)

> t

[1] 1.970377 1.971379 1.965927

> ncp <- (1)/se

> pt(t,df=pmin(num1,num2)-1,ncp=ncp)-pt(-t,df=pmin(num1,num2)-1,ncp=ncp)

[1] 0.01374112 0.29533455 0.03660842

> 1-(pt(t,df=pmin(num1,num2)-1,ncp=ncp)-pt(-t,df=pmin(num1,num2)-1,ncp=ncp))

[1] 0.9862589 0.7046655 0.9633916

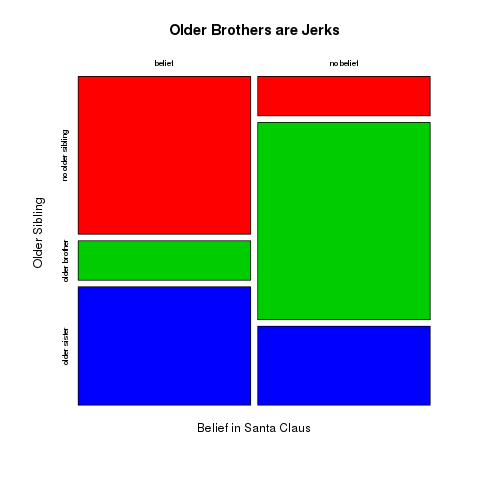

12. Two Way Tables

Here we look at some examples of how t, .toc-backrefo work with two way tables. We

assume that you can enter data and understand the different data

types.

We first look at how to create a table from raw data. Here we use a

fictitious data set, smoker.csv. This data set

was created only to be used as an example, and the numbers were

created to match an example from a text book, p. 629 of the 4th

edition of Moore and McCabe’s Introduction to the Practice of

Statistics. You should look at the data set in a spreadsheet to see

how it is entered. The information is ordered in a way to make it

easier to figure out what information is in the data.

The idea is that 356 people have been polled on their smoking status

(Smoke) and their socioeconomic status (SES). For each person it was

determined whether or not they are current smokers, former smokers, or

have never smoked. Also, for each person their socioeconomic status

was determined (low, middle, or high). The data file contains only two

columns, and when read R interprets them both as factors:

> smokerData <- read.csv(file='smoker.csv',sep=',',header=T)

> summary(smokerData)

Smoke SES

current:116 High :211

former :141 Low : 93

never : 99 Middle: 52

You can create a two way table of occurrences using the table command

and the two columns in the data frame:

> smoke <- table(smokerData$Smoke,smokerData$SES)

> smoke

High Low Middle

, .toc-backref current 51 43 22

former 92 28 21

never 68 22 9

In this example, there are 51 people who are current smokers and are

in the high SES. Note that it is assumed that the two lists given in

the table command are both factors. (More information on this is

available in the chapter on data types.)

Sometimes you are given data in the form of a table and would like to

create a table. Here we examine how to create the table

directly. Unfortunately, this is not as direct a method as might be

desired. Here we create an array of numbers, specify the row and

column names, and then convert it to a table.

In the example below we will create a table identical to the one given

above. In that example we have 3 columns, and the numbers are

specified by going across each row from top to bottom. We need to

specify the data and the number of rows:

> smoke <- matrix(c(51,43,22,92,28,21,68,22,9),ncol=3,byrow=TRUE)

> colnames(smoke) <- c("High","Low","Middle")

> rownames(smoke) <- c("current","former","never")

> smoke <- as.table(smoke)

> smoke

High Low Middle

current 51 43 22

former 92 28 21

never 68 22 9

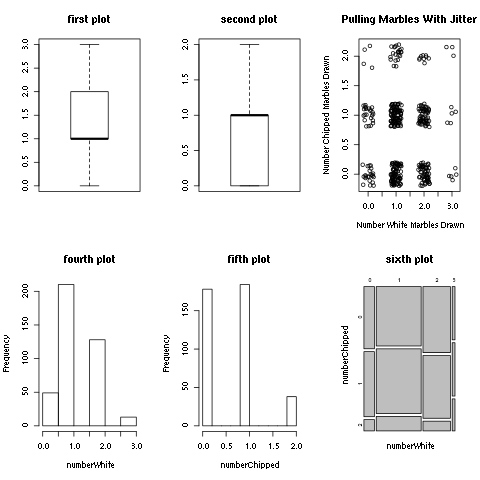

The plot command will automatically produce a mosaic plot if its

primary argument is a table. Alternatively, you can call the

mosaicplot command directly.

> smokerData <- read.csv(file='smoker.csv',sep=',',header=T)

> smoke <- table(smokerData$Smoke,smokerData$SES)

> mosaicplot(smoke)

> help(mosaicplot)

>

The mosaicplot command takes many of the same arguments for

annotating a plot:

> mosaicplot(smoke,main="Smokers",xlab="Status",ylab="Economic Class")

>

If you wish to switch which side (horizontal versus vertical) to

determine the primary proportion then you can use the sort

option. This can , .toc-backrefbe used to switch whether the width or height is used

for the first proportional length:

> mosaicplot(smoke,main="Smokers",xlab="Status",ylab="Economic Class")

> mosaicplot(smoke,sort=c(2,1))

>

Finally if you wish to switch which side is used for the vertical and

horzintal axis you can use the dir option:

> mosaicplot(smoke,main="Smokers",xlab="Status",ylab="Economic Class")

> mosaicplot(smoke,dir=c("v","h"))

>

13. Data Management

Here we look at some common tasks that come up when dealing with

data. These tasks range from assembling different data sets into more

convenient forms and ways to apply functions to different parts of the

data sets. The topics in this section demonstrate some of the power of

R, but it may not be clear at first. The functions are commonly used

in a wide variety of circumstances for a number of different

reasons. These tools have saved me a great deal of time and effort in

circumstances that I would not have predicted in advance.

The important thing to note, though, is that this section is called

“Data Management.” It is not called “Data Manipulation.”

Politicians “manipulate” data, we “manage” them.

When you have more than one set of data you may want to bring them

together. You can bring different data sets together by appending as

rows (rbind) or by appending as columns (cbind). The first example

shows how this done with two data frames. The arguments to the

functions can take any number of objects. We only use two here to keep

the demonstration simpler, but additional data frames can be appended

in the same call. It is important to note that when you bring things

together as rows the names of the objects within the data frame must

be the same.

> a <- data.frame(one=c( 0, 1, 2),two=c("a","a","b"))

> b <- data.frame(one=c(10,11,12),two=c("c","c","d"))

> a

one two

1 0 a

2 1 a

3 2 b

> b

one two

1 10 c

2 11 c

3 12 d

> v <- rbind(a,b)

> typeof(v)

[1] "list"

> v

one two

1 0 a

2 1 a

3 2 b

4 10 c

5 11 c

6 12 d

> w <- cbind(a,b)

> typeof(w)

[1] "list"

> w

one two one two

1 0 a 10 c

2 1 a 11 c

3 2 b 12 d

> names(w) = c("one","two","three","four")

> w

one two three four

1 0 a 10 c

, .toc-backref2 1 a 11 c

3 2 b 12 d

The same commands also work with vectors and matrices and behave in a similar manner.

> A = matrix(c( 1, 2, 3, 4, 5, 6),ncol=3,byrow=TRUE)

> A

[,1] [,2] [,3]

[1,] 1 2 3

[2,] 4 5 6

> B = matrix(c(10,20,30,40,50,60),ncol=3,byrow=TRUE)

> B

[,1] [,2] [,3]

[1,] 10 20 30

[2,] 40 50 60

> V <- rbind(A,B)

> typeof(V)

[1] "double"

> V

[,1] [,2] [,3]

[1,] 1 2 3

[2,] 4 5 6

[3,] 10 20 30

[4,] 40 50 60

> W <- cbind(A,B)

> typeof(W)

[1] "double"

> W

[,1] [,2] [,3] [,4] [,5] [,6]

[1,] 1 2 3 10 20 30

[2,] 4 5 6 40 50 60

The various apply functions can be an invaluable tool when trying to

work with subsets within a data set. The different versions of the

apply commands are used to take a function and have the function

perform an operation on each part of the data. There are a wide

variety of these commands, but we only look at two sets of them. The

first set, lapply and sapply, is used to apply a function to every

element in a list. The second one, tapply, is used to apply a

function on each set broken up by a given set of factors.

13.2.1. Operations on Lists and Vectors

First, the lapply command is used to take a list of items and

perform some function on each member of the list. That is, the list

includes a number of different objects. You want to perform some

operation on every object within the list. You can use lapply to

tell R to go through each item in the list and perform the desired

action on each item.

In the following example a list is created with three elements. The

first is a randomly generated set of numbers with a normal

distribution. The second is a randomly generated set of numbers with

an exponential distribution. The last is a set of factors. A summary

is then performed on each element in the list.

> x <- list(a=rnorm(200,mean=1,sd=10),

b=rexp(300,10.0),

c=as.factor(c("a","b","b","b","c","c")))

> lapply(x,summary)

$a

Min. 1st Qu. Median Mean 3rd Qu. Max.

-26.65000 -6.91200 -0.39250 0.09478 6.86700 32.00000

$b

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.0001497 0.0242300 0.0633300 0.0895400 0.1266000 0.7160000

$c

a b c

1 3 2

The lapply command returns a list. The entries in the list have the

same names as the entries in the list that is passed to it. The values

of each entry are the results from applying the function. The sapply

function is similar, but the difference is that it tries to turn

the result into a vector or matrix if possible. If it does not make

sense then it returns a list just like the lapply command.

> x <- list(a=rnorm(8,mean=1,sd=10),b=rexp(10,10.0))

> x

$a

[1] -0.3881426 6.2910959 13.0265859 -1.5296377 6.9285984 -28.3050569

[7] 11.9119731 -7.6036997

$b

[1] 0.212689007 0.081818395 0.222462531 0.181424705 0.168476454 0.002924134

[7] 0.007010114 0.016301837 0.081291728 0.055426055

> val <- lapply(x,mean)

> typeof(val)

[1] "list"

> val

$a

[1] 0.04146456

$b

[1] 0.1029825

> val$a

[1] 0.04146456

> val$b

[1] 0.1029825

>

>

> other <- sapply(x,mean)

> typeof(other)

[1] "double"

> other

a b

0.04146456 0.10298250

> other[1]

a

0.04146456

> other[2]

b

0.1029825

13.2.2. Operations By Factors

Another widely used variant of the apply functions is the tapply

function. The tapply function will take a list of data, usually a vector, a list of factors of the same list, and a function. It will then apply the function to each subset of the data that matches each of the factors.

> val <- data.frame(a=c(1,2,10,20,5,50),

b=as.factor(c("a","a","b","b","a","b")))

> val

a b

1 1 a

2 2 a

3 10 b

4 20 b

5 5 a

6 50 b

> result <- tapply(val$a,val$b,mean)

> typeof(result)

[1] "double"

> result

a b

2.666667 26.666667

> result[1]

a

2.666667

, .toc-backref> result[2]

b

26.66667

> result <- tapply(val$a,val$b,summary)

> typeof(result)

[1] "list"

> result

$a

Min. 1st Qu. Median Mean 3rd Qu. Max.

1.000 1.500 2.000 2.667 3.500 5.000

$b

Min. 1st Qu. Median Mean 3rd Qu. Max.

10.00 15.00 20.00 26.67 35.00 50.00

> result$a

Min. 1st Qu. Median Mean 3rd Qu. Max.

1.000 1.500 2.000 2.667 3.500 5.000

> result$b

Min. 1st Qu. Median Mean 3rd Qu. Max.

10.00 15.00 20.00 26.67 35.00 50.00

14. Time Data Types

The time data types are broken out into a separate section from the

introductory section on data types. (Basic Data Types) The reason

for this is that dealing with time data can be subtle and must be done

carefully because the data type can be cast in a variety of different

ways. It is not an introductory topic, and if not done well can scare

off the normal people.

I will first go over the basic time data types and then explore the

different kinds of operations that are done with the time data

types. Please be cautious with time data and read the complete

description including the caveats. There are some common mistakes that

result in calculations that yield results that are very different from

the intended values.

There are a variety of different types specific to time data fields

in R. Here we only look at two, the POSIXct and POSIXlt data types:

POSIXct

The POSIXct data type is the number of seconds since the start

of January 1, 1970. Negative numbers represent the number of seconds

before this time, and positive numbers represent the number of

seconds afterwards.

POSIXlt

The POSIXlt data type is a vector, and the entries in the vector

have the following meanings:

- seconds

- minutes

- hours

- day of month (1-31)

- month of the year (0-11)

- years since 1900

- day of the week (0-6 where 0 represents Sunday)

- day of the year (0-365)

- Daylight savings indicator (positive if it is daylight savings)

Part of the difficulty with time data types is that R prints them out

in a way that is different from how it stores them internally. This

can make type conversions tricky, and you have to be careful and test

your operations to insure that R is doing what you think it is doing.

To get the current time, the Sys.time() can be used, and you can

play around a bit with the basic types to get a feel for what R is

doing. The as.POSIXct and as.POSIXlt commands are used to

convert the time value into the different formats.

> help(DateTimeClasses)

> t <- Sys.time()

> typeof(t)

[1] "double"

> t

[1] "2014-01-23 14:28:21 EST"

> print(t)

[1] "2014-01-23 14:28:21 EST"

> cat(t,"\n")

1390505301

> c <- as.POSIXct(t)

> typeof(c)

[1] "double"

> print(c)

[1] "2014-01-23 14:28:21 EST"

> cat(c,"\n")

1390505301

>

>

> l <- as.POSIXlt(t)

> l

[1] "2014-01-23 14:28:21 EST"

> typeof(l)

[1] "list"

> print(l)

[1] "2014-01-23 14:28:21 EST"

> cat(l,"\n")

Error in cat(list(...), file, sep, fill, labels, append) :

argument 1 (type 'list') cannot be handled by 'cat'

> names(l)

NULL

> l[[1]]

[1] 21.01023

> l[[2]]

[1] 28

> l[[3]]

[1] 14

> l[[4]]

[1] 23

> l[[5]]

[1] 0

> l[[6]]

[1] 114

> l[[7]]

[1] 4

> l[[8]]

[1] 22

> l[[9]]

[1] 0

>

> b <- as.POSIXct(l)

> cat(b,"\n")

1390505301

There are times when you have a time data type and want to convert it

into a string so it can be saved into a file to be read by another

application. The strftime command is used to take a time data type

and convert it to a string. You must supply an additional format

string to let R what format you want to use. See the help page on

strftime to get detailed information about the format string.

> help(strftime)

>

> t <- Sys.time()

> cat(t,"\n")

1390506463

> timeStamp <- strftime(t,"%Y-%m-%d %H:%M:%S")

> timeStamp

[1] "2014-01-23 14:47:43"

> typeof(timeStamp)

[1] "character"

Commonly a time stamp is saved in a data file, and it must be

converted into a time data type to allow for calculations. For

example, you may be interested in how much time has elapsed between

two observations. The strptime command is used to take a string and

convert it into a time data type. Like strftime it requires a format

string in addition to the time stamp.

The strptime command is used to take a string and convert it into a

form that R can use for calculations. In the following example a data

frame is defined that has the dates stored as strings. If you read the

data in from a csv file this is how R will keep track of the

data. Note that in this context the strings are assumed to represent

ordinal data, and R will assume that the data field is a set of

factors. You have to use the strptime command to convert it into a

time field.

> myData <- data.frame(time=c("2014-01-23 14:28:21","2014-01-23 14:28:55",

"2014-01-23 14:29:02","2014-01-23 14:31:18"),

speed=c(2.0,2.2,3.4,5.5))

> myData

time speed

1 2014-01-23 14:28:21 2.0

2 2014-01-23 14:28:55 2.2

3 2014-01-23 14:29:02 3.4

4 2014-01-23 14:31:18 5.5

> summary(myData)

time speed

2014-01-23 14:28:21:1 Min. :2.000

2014-01-23 14:28:55:1 1st Qu.:2.150

2014-01-23 14:29:02:1 Median :2.800

2014-01-23 14:31:18:1 Mean :3.275

3rd Qu.:3.925

Max. :5.500

> myData$time[1]

[1] 2014-01-23 14:28:21

4 Levels: 2014-01-23 14:28:21 2014-01-23 14:28:55 ... 2014-01-23 14:31:18

> typeof(myData$time[1])

[1] "integer"

>

>

> myData$time <- strptime(myData$time,"%Y-%m-%d %H:%M:%S")

> myData

time speed

1 2014-01-23 14:28:21 2.0

2 2014-01-23 14:28:55 2.2

3 2014-01-23 14:29:02 3.4

4 2014-01-23 14:31:18 5.5

> myData$time[1]

[1] "2014-01-23 14:28:21"

> typeof(myData$time[1])

[1] "list"

> myData$time[1][[2]]

[1] 28

Now you can perform operations on the fields. For example you can

determine the time between observations. (Please see the notes below

on time operations. This example is a bit misleading!)

> N = length(myData$time)

> myData$time[2:N] - myData$time[1:(N-1)]

Time differences in secs

[1] 34 7 136

attr(,"tzone")

[1] ""

In addition to the time data types R also has a date data type. The

difference is that the date data type keeps track of numbers of days

rather than seconds. You can cast a string into a date type using the

as.Date function. The as.Date function takes the same arguments as

the time data types discussed above.

> theDates <- c("1 jan 2012","1 jan 2013","1 jan 2014")

> dateFields <- as.Date(theDates,"%d %b %Y")

> typeof(dateFields)

[1] "double"

> dateFields

[1] "2012-01-01" "2013-01-01" "2014-01-01"

> N <- length(dateFields)

> diff <- dateFields[1:(N-1)] - dateFields[2:N]

> diff

Time differences in days

[1] -366 -365

You can also define a date in terms of the number days after another

date using the origin option.

> infamy <- as.Date(-179,origin="1942-06-04")

> infamy

[1] "1941-12-07"

>

> today <- Sys.Date()

> today

[1] "2014-01-23"

> today-infamy

Time difference of 26345 days

Finally, a nice function to know about and use is the format

command. It can be used in a wide variety of situations, and not just

for dates. It is helpful for dates, though, because you can use it in

cat and print statements to make sure that your output is in

exactly the form that you want.

> theTime <- Sys.time()

> theTime

[1] "2014-01-23 16:15:05 EST"

> a <- rexp(1,0.1)

> a

[1] 7.432072

> cat("At about",format(theTime,"%H:%M"),"the time between occurances was around",format(a,digits=3),"seconds\n")

At about 16:15 the time between occurances was around 7.43 seconds

The most difficult part of dealing with time data can be converting it

into the right format. Once a time or date is stored in R’s internal

format then a number of basic operations are available. The thing to

keep in mind, though, is that the units you get after an operation can

vary depending on the magnitude of the time values. Be very careful

when dealing with time operations and vigorously test your codes.

> now <- Sys.time()

> now

[1] "2014-01-23 16:31:00 EST"

> now-60

[1] "2014-01-23 16:30:00 EST"

>

> earlier <- strptime("2000-01-01 00:00:00","%Y-%m-%d %H:%M:%S")

> later <- strptime("2000-01-01 00:00:20","%Y-%m-%d %H:%M:%S")

> later-earlier

Time difference of 20 secs

> as.double(later-earlier)

[1] 20

>

> earlier <- strptime("2000-01-01 00:00:00","%Y-%m-%d %H:%M:%S")

> later <- strptime("2000-01-01 01:00:00","%Y-%m-%d %H:%M:%S")

> later-earlier

Time difference of 1 hours

> as.double(later-earlier)

[1] 1

>

> up <- as.Date("1961-08-13")

> down <- as.Date("1989-11-9")

> down-up

Time difference of 10315 days

The two examples involving the variables earlier and later in the

previous code sample should cause you a little concern. The value of

the difference depends on the largest units with respect to the

difference! The issue is that when you subtract dates R uses the

equivalent of the difftime command. We need to know how this

operates to reduce the ambiguity when comparing times.

> help(difftime)

>

> earlier <- strptime("2000-01-01 00:00:00","%Y-%m-%d %H:%M:%S")

> later <- strptime("2000-01-01 01:00:00","%Y-%m-%d %H:%M:%S")

> difftime(later,earlier)

Time difference of 1 hours

> difftime(later,earlier,units="secs")

Time difference of 3600 secs

One thing to be careful about difftime is that it is a double

precision number, but it has units attached to it. This can be tricky,

and you should be careful about the ambiguity in using this command. I

personally always try to specify the units to avoid this.

> earlier <- strptime("2000-01-01 00:00:00","%Y-%m-%d %H:%M:%S")

> later <- strptime("2000-01-01 00:00:20","%Y-%m-%d %H:%M:%S")

> d <- difftime(later,earlier)

> d

Time difference of 20 secs

> typeof(d)

[1] "double"

> as.double(d)

[1] 20

Another way to define a time difference is to use the as.difftime

command. It takes two dates and will compute the difference between

them. It takes a time, its format, and the units to use. Note that in

the following example R is able to figure out what the units are when

making the calculation.

> diff <- as.difftime("00:30:00","%H:%M:%S",units="hour")

> diff

Time difference of 0.5 hours

> Sys.time()

[1] "2014-01-23 16:45:39 EST"

> Sys.time()+diff

[1] "2014-01-23 17:15:41 EST"

The last thing to mention is that once a time stamp is cast into one

of R’s internal formats comparisons can be made in a natural way.

> diff <- as.difftime("00:30:00","%H:%M:%S",units="hour")

> now <- Sys.time()

> later <- now + diff

> now

, .toc-backref[1] "2014-01-23 16:47:48 EST"

> later

[1] "2014-01-23 17:17:48 EST"

>

> if(now < later)

{

cat("there you go\n")

}

there you go

15. Introduction to Programming

We look at running commands from a source file. We also include an

overview of the different statements that are used for control-flow

that determines which code is executed by the interpreter.

In the next section the ways to execute the commands in a file using

the source command are given. The remaining sections are used to

list the various flow control options that are available in the R

language definition. The language definition has a wide variety of

control functions which can be found using the help command.

A set of R commands can be saved in a file and then executed as if you

had typed them in from the command line. The source command is used

to read the file and execute the commands in the same sequence given

in the file.

> source('file.R')

> help(source)

>

If you simply source the file the commands are not printed, and the

results of commands are not printed. This can be overridden using the

echo, print.eval, and verbose options.

Some examples are given assuming that a file, simpleEx.R, is in the

current directory. The file is given below:

# Define a variable.

x <- rnorm(10)

# calculate the mean of x and print out the results.

mux = mean(x)

cat("The mean of x is ",mean(x),"\n")

# print out a summary of the results

summary(x)

cat("The summary of x is \n",summary(x),"\n")

print(summary(x))

The file also demonstrates the use of # to specify

comments. Anything after the # is ignored. Also, the file

demonstrates the use of cat and print to send results to the

standard output. Note that the commands have options to send results

to a file. Use help for more information.

The output for the different options can be found below:

> source('simpleEx.R')

The mean of x is -0.4817475

The summary of x is

-2.24 -0.5342 -0.2862 -0.4817 -0.1973 0.4259

Min. 1st Qu. Median Mean 3rd Qu. Max.

-2.2400 -0.5342 -0.2862 -0.4817 -0.1973 0.4259

>

>

>

> source('simpleEx.R',echo=TRUE)

Min. 1st Qu. Median Mean 3rd Qu. Max.

-2.32600 -0.69140 -0.06772 -0.13540 0.46820 1.69600

>

>

>

> source('simpleEx.R',print.eval=TRUE)

The mean of x is 0.1230581

Min. 1st Qu. Median Mean 3rd Qu. Max.

-1.7020 -0.2833 0.1174 0.1231 0.9103 1.2220

The summary of x is

-1.702 -0.2833 0.1174 0.1231 0.9103 1.222

Min. 1st Qu. Median Mean 3rd Qu. Max.

-1.7020 -0.2833 0.1174 0.1231 0.9103 1.2220

>

>

>

> source('simpleEx.R',print.eval=FALSE)

The mean of x is 0.6279428

The summary of x is

-0.7334 -0.164 0.9335 0.6279 1.23 1.604

Min. 1st Qu. Median Mean 3rd Qu. Max.

-0.7334 -0.1640 0.9335 0.6279 1.2300 1.6040

>

>

>

>

> source('simpleEx.R',verbose=TRUE)

'envir' chosen:<environment: R_GlobalEnv>

encoding = "native.enc" chosen

--> parsed 6 expressions; now eval(.)ing them:

>>>> eval(expression_nr. 1 )

=================

> # Define a variable.

> x <- rnorm(10)

curr.fun: symbol <-

.. after ‘expression(x <- rnorm(10))’

>>>> eval(expression_nr. 2 )

=================

> # calculate the mean of x and print out the results.

> mux = mean(x)

curr.fun: symbol =

.. after ‘expression(mux = mean(x))’

>>>> eval(expression_nr. 3 )

=================

> cat("The mean of x is ",mean(x),"\n")

The mean of x is -0.1090932

curr.fun: symbol cat

.. after ‘expression(cat("The mean of x is ",mean(x),"\n"))’

>>>> eval(expression_nr. 4 )

=================

> # print out a summary of the results

> summary(x)

curr.fun: symbol summary

Min. 1st Qu. Median Mean 3rd Qu. Max.

-1.3820 -1.0550 -0.1995 -0.1091 0.6813 2.1050

.. after ‘expression(summary(x))’

>>>> eval(expression_nr. 5 )

=================

> cat("The summary of x is \n",summary(x),&q, .toc-backrefuot;\n")

The summary of x is

-1.382 -1.055 -0.1995 -0.1091 0.6813 2.105

curr.fun: symbol cat

.. after ‘expression(cat("The summary of x is \n",summary(x),"\n"))’

>>>> eval(expression_nr. 6 )

=================

> print(summary(x))

Min. 1st Qu. Median Mean 3rd Qu. Max.

-1.3820 -1.0550 -0.1995 -0.1091 0.6813 2.1050

curr.fun: symbol print

.. after ‘expression(print(summary(x)))’

One common problem that occurs is that R may not know where to find a

file.

> source('notThere.R')

Error in file(filename, "r", encoding = encoding) :

cannot open the connection

In addition: Warning message:

In file(filename, "r", encoding = encoding) :

cannot open file 'notThere.R': No such file or directory

R will search the current working directory. You can see what files

are in the directory using the dir command, and you can determine

the current directory using the getwd command.

> getwd()

[1] "/home/black/public_html/tutorial/R/rst/source/R"

> dir()

[1] "plotting.rData" "power.R" "shadedRegion.R"

You can change the current directory, and the options available depend

on how you are using R. For example on a Windows PC or a Macintosh you

can use the menu options to change the working directory. You can

choose the directory using a graphical file browser. Otherwise, you

can change to the correct directory before running R or use the

setwd command.

Conditional execution is available using the if statement and the

corresponding else statement.

> x = 0.1

> if( x < 0.2)

{

x <- x + 1

cat("increment that number!\n")

}

, .toc-backrefincrement that number!

> x

[1] 1.1

The else statement can be used to specify an alternate option. In the

example below note that the else statement must be on the same line

as the ending brace for the previous if block.

> x = 2.0

> if ( x < 0.2)

{

x <- x + 1

cat("increment that number!\n")

} else

{

x <- x - 1

cat("nah, make it smaller.\n");

}

nah, make it smaller.

> x

[1] 1

Finally, the if statements can be chained together for multiple

options. The if statement is considered a single code block, so more

if statements can be added after the else.

> x = 1.0

> if ( x < 0.2)

{

x <- x + 1

cat("increment that number!\n")

} else if ( x < 2.0)

{

x <- 2.0*x

cat("not big enough!\n")

} else

{

x <- x - 1

, .toc-backref cat("nah, make it smaller.\n");

}

not big enough!

> x

[1] 2

The argument to the if statement is a logical expression. A full

list of logical operators can be found in the types document focusing

on logical variables (Logical).

The for loop can be used to repeat a set of instructions, and it is

used when you know in advance the values that the loop variable will

have each time it goes through the loop. The basic format for the

for loop is for(var in seq) expr

An example is given below:

> for (lupe in seq(0,1,by=0.3))

{

cat(lupe,"\n");

}

0

0.3

0.6

0.9

>

> x <- c(1,2,4,8,16)

> for (loop in x)

{

cat("value of loop: ",loop,"\n");

}

value of loop: 1

value of loop: 2

value of loop: 4

value of loop: 8

value of loop: 16

See the section on breaks for more options (break and next statements)

The while loop can be used to repeat a set of instructions, and, .toc-backref it

is often used when you do not know in advance how often the

instructions will be executed. The basic format for a while loop is

while(cond) expr

>

> lupe <- 1;

> x <- 1

> while(x < 4)

{

x <- rnorm(1,mean=2,sd=3)

cat("trying this value: ",x," (",lupe," times in loop)\n");

lupe <- lupe + 1

}

trying this value: -4.163169 ( 1 times in loop)

trying this value: 3.061946 ( 2 times in loop)

trying this value: 2.10693 ( 3 times in loop)

trying this value: -2.06527 ( 4 times in loop)

trying this value: 0.8873237 ( 5 times in loop)

trying this value: 3.145076 ( 6 times in loop)

trying this value: 4.504809 ( 7 times in loop)

See the section on breaks for more options (break and next statements)

The repeat loop is similar to the while loop. The difference is

that it will always begin the loop the first time. The while loop

will only start the loop if the condition is true the first time it is

evaluated. Another difference is that you have to explicitly specify

when to stop the loop using the break command.

That is you need to execute the break statement to get out of the

loop.

> repeat

{

x <- rnorm(1)

if(x < -2.0) break

}

> x

[1] -2.300532

See the section on breaks for more options (break and next statements)

The break statement is used to stop the execution of the current

loop. It will break out of the current loop. The next statement is

used to skip the statements that follow and restart the current

loop. If a for loop is used then the next statement will update

the loop variable.

> x <- rnorm(5)

> x

[1] 1.41699338 2.28086759 -0.01571884 0.56578443 0.60400784

> for(lupe in x)

{

if (lupe > 2.0)

next

if( (lupe<0.6) && (lupe > 0.5))

break

cat("The value of lupe is ",lupe,"\n");

}

The value of lupe is 1.416993

The value of lupe is -0.01571884

The switch takes an expression and returns a value in a list based

on the value of the expression. How it does this depends on the data

type of the expression. The basic syntax is switch(statement,item1,item2,item3,...,itemN).

If the result of the expression is a number then it returns the item

in the list with the same index. Note that the expression is cast as

an integer if it is not an integer.

> x <- as.integer(2)

> x

[1] 2

> z = switch(x,1,2,3,4,5)

> z

[1] 2

> x <- 3.5

> z = switch(x,1,2,3,4,5)

> z

[1] 3

If the result of the expression is a string, then the list of items

should be in the form “valueN”=resultN, and the statement will

return the result that matches the value.

> y <- rnorm(5)

> y

[1] 0.4218635 -0.8205637 -1.0191267 -0.6080061 -0.6079133

> x <- "sd"

> z <- switch(x,"mean"=mean(y),"median"=median(y),"variance"=var(y),"sd"=sd(y))

> z

[1] 0.5571847

> x <- "median"

> z <- switch(x,"mean"=mean(y),"median"=median(y),"variance"=var(y),"sd"=sd(y))

> z

[1] -0.6080061

The command to read input from the keyboard is the scan

statement. It has a wide variety of options and can be fine tuned to

your specific needs. We only look at the basics here. The scan

statement waits for input from a user, and it returns the value that

was typed in.

When using the command with no set number of lines the command will

continue to read keyboard input until a blank line is entered.

> help(scan)

> a <- scan(what=double(0))

1: 3.5

2:

Read 1 item

> a

[1] 3.5

> typeof(a)

[1] "double"

>

> a <- scan(what=double(0))

1: yo!

1:

Error in scan(file, what, nmax, sep, dec, quote, skip, nlines, na.strings, :

scan() expected 'a real', got 'yo!'

If you wish to only have it read from a fixed number of lines the

nmax option can specify how many lines can be typed in, and the

multi.line option can be used to turn off multi-line entry.

> a <- scan(what=double(0),nmax=1,multi.line = FALSE)

1: 6.7

Read 1 item

> a

[1] 6.7

A shallow overview of defining functions is given here. A few

subtleties will be noted, but R can be a little quirky with respect to

defining functions. The first bit of oddness is that you can think of

a function as an object where you define the function and assign it to

a variable name.

To define a function you assign it to a name, and the keyword

function is used to denote the start of the function and its

argument list.

> newDef <- function(a,b)

{

x = runif(10,a,b)

mean(x)

}

> newDef(-1,1)

[1] 0.06177728

> newDef

function(a,b)

{

x = runif(10,a,b)

mean(x)

}

The last expression in the function is what is returned. So in the

example above the sample mean of the numbers is returned.

> x <- newDef(0,1)

> x

[1] 0.4800442

The arguments that are passed are matched in order. They can be

specified explicitly, though.

> newDef(b=10,a=1)

[1] 4.747509

> newDef(10,1)

[1] NaN

Warning message:

In runif(10, a, b) : NAs produced

You can mix this approach, and R will try to match up the named

arguments and then match the rest going from left to right. Another

bit of weirdness is that R will not evaluate an expression in the

argument list until the moment it is needed in the function. This is a

different kind of behavior than what most people are used to, so be

very careful about this. The best rule of thumb is to not put in

operations in an argument list if they matter after the function is

called.

Another common task is to have a function return multiple items. This

can be accomplished by returning a list of items. The objects within

a list can be accessed using the same $ notation that is used for

data frames.

> c = c(1,2,3,4,5)

> sample <- function(a,b)

{

value = switch(a,"median"=median(b),"mean"=mean(b),"variance"=var(b))

largeVals = length(c[c>1])

list(stat=value,number=largeVals)

}

> result <- sample("median",c)

> result

$stat

[1] 3

$number

[1] 4

> result$stat

[1] 3

> result$number

[1] 4

There is another potential problem that can occur when using a

function in R. When it comes to determining the value of a variable

there is a path that R will use to search for its value. In the case

of functions if a previously undefined variable appears R will look at

the argument list for the function. Next it will look in the current

work space. If you are not careful R will find the value some place

where you do not expect it, and your function will return a value that

is not correct, and no error will be given. Be very careful about the

names of variables especially when using functions.

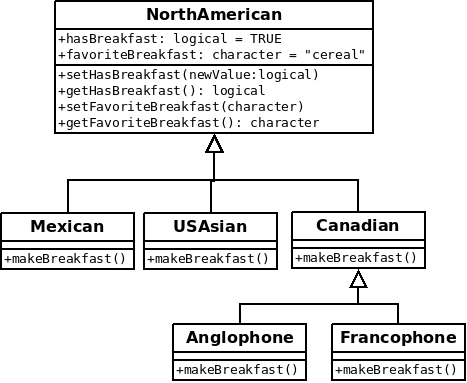

Object Oriented Programming

There are at least three different approaches to object oriented programming in R. We examine two of them, the S3 and S4 classes. The other approach makes use of a package, and we focus instead on the two built in classes.

1. S3 Classes

We examine how to create S3 classes. It is assumed that you are familiar with the basic data types and scripting (Introduction to Programming).

First, everything in R is treated like as an object. We have seen this with functions. Many of the objects that are created within an R session have attributes associated with them. One common attribute associated with an object is its class.

You can set the class attribute using the class command. One thing to notice is that the class is a vector which allows an object to inherit from multiple classes, and it allows you to specify the order of inheritance for complex classes. You can also use the class command to determine the classes associated with an object.

> bubba <- c(1,2,3)

> bubba

[1] 1 2 3

>

> class(bubba)

[1] "numeric"

>

> class(bubba) <- append(class(bubba),"Flamboyancy")

> class(bubba)

[1] "numeric" "Flamboyancy"

Note

A new command, append, is used here. The first argument is a vector, and the function adds the following arguments to the end of the vector.

One way to define a method for a class is to use the UseMethod command to define a hierarchy of functions that will react appropriately. The UseMethod command will tell R to look for a function whose prefix matches the current function, and it searches for a suffix in order from the vector of class names. In other words a set of functions can be defined, and the function that is actually called will be determined by the class name of the first object in the list of arguments.

You first have, .toc-backref to define a generic function to reserve the function name. The UseMethod command is then used to tell the R system to search for a different function. The search is based on the name of the function and the names of an object’s classes. The name of the functions have two parts separated by a ”.” where the prefix is the function name and the suffix is the name of a class.

That is a lot of verbiage 罗嗦 to describe a relatively simple idea. A very basic example is given below:

> bubba <- list(first="one", second="two", third="third")

> class(bubba) <- append(class(bubba),"Flamboyancy")

>

> bubba

$first

[1] "one"

$second

[1] "two"

$third

[1] "third"

attr(,"class")

[1] "list" "Flamboyancy"

>

> GetFirst <- function(x)

+ {

+ UseMethod("GetFirst",x)

+ }

>

> GetFirst.Flamboyancy <- function(x)

+ {

+ return(x$first)

+ }

>

> GetFirst(bubba)

[1] "one"

The plethora of object oriented approaches leads to a natural

question. Which one should you use? With respect to S3 and S4 classes,

the S3 class is more flexible, and the S4 class is a more structured

approach. This is a nice way of saying that the S3 class approach is